Face Recognition: An Introduction

December 5, 2010

As the need for higher levels of security rises, technology is bound to grow to meet it. Any new creation, enterprise, or development should be uncomplicated and acceptable to end users in order to spread worldwide. This strong demand for user-friendly systems that can secure our assets and protect our privacy, without losing our identity in a sea of numbers, has drawn scientists' attention to what is called biometrics.

Biometrics is an emerging area of bioengineering; it is the automated method of recognizing a person based on a physiological or behavioral characteristic. Several biometric systems exist, such as signature, fingerprints, voice, iris, retina, hand geometry, ear geometry, and face. Among these systems, facial recognition appears to be one of the most universal, collectable, and accessible.

Biometric face recognition, otherwise known as Automatic Face Recognition (AFR), is a particularly attractive biometric approach, since it focuses on the same identifier that humans use primarily to distinguish one person from another: their “faces”. One of its main goals is the understanding of the complex human visual system and the knowledge of how humans represent faces in order to discriminate different identities with high accuracy.

The face recognition problem can be divided into two main stages: face detection, and face recognition, which covers both face verification (or authentication) and face identification.

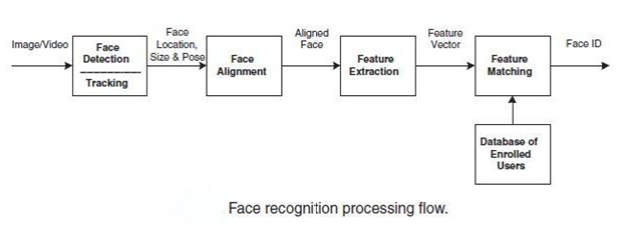

The detection stage is the first stage; it includes identifying and locating a face in an image.

The recognition stage is the second stage; it includes feature extraction, where important information for discrimination is saved, and the matching, where the recognition result is given with the aid of a face database.

Several face recognition methods have been proposed. In the vast literature on the topic there are different classifications of the existing techniques. The following is one possible high-level classification:

• Holistic Methods: The whole face image is used as the raw input to the recognition system. An example is the well-known PCA-based technique introduced by Kirby and Sirovich, followed by Turk and Pentland.

• Local Feature-based Methods: Local features are extracted, such as eyes, nose and mouth. Their locations and local statistics (appearance) are the input to the recognition stage. An example of this method is Elastic Bunch Graph Matching (EBGM).

Although progress in face recognition has been encouraging, the task has also turned out to be a difficult endeavor. In the following sections, we give a brief review on technical advances and analyze technical challenges.

History

Automated face recognition is a relatively new concept. Developed in the 1960s, the first semi-automated system for face recognition required the administrator to locate features (such as eyes, ears, nose, and mouth) on the photographs before it calculated distances and ratios to a common reference point, which were then compared to reference data. In the 1970s, Goldstein, Harmon, and Lesk used 21 specific subjective markers, such as hair color and lip thickness, to automate the recognition. The problem with both of these early solutions was that the measurements and locations were manually computed.

In 1988, Kirby and Sirovich applied principal component analysis, a standard linear algebra technique, to the face recognition problem. This was considered something of a milestone, as it showed that fewer than one hundred values were required to accurately code a suitably aligned and normalized face image. In 1991, Turk and Pentland discovered that, when using the eigenfaces technique, the residual error could be used to detect faces in images, a discovery that enabled reliable real-time automated face recognition systems. Although the approach was somewhat constrained by environmental factors, it nonetheless created significant interest in furthering the development of automated face recognition technologies.

The technology first captured the public's attention through the media reaction to a trial implementation at the January 2001 Super Bowl, which captured surveillance images and compared them to a database of digital mugshots. This demonstration initiated much-needed analysis of how to use the technology to support national needs while being considerate of the public's social and privacy concerns. Today, face recognition technology is being used to combat passport fraud, support law enforcement, identify missing children, and minimize benefit/identity fraud.

Overview

As one of the most successful applications of image analysis and understanding, face recognition has recently gained significant attention. Over the last ten years or so, it has become a popular area of research in computer vision.

Some examples of face recognition application areas are:

| Application area | Examples |
| --- | --- |
| Enterprise Security | Computer and physical access control |
| Government Events | Criminal/terrorist screening; surveillance |
| Immigration/Customs | Illegal immigrant detection; passport/ID card authentication |
| Casino | Filtering suspicious gamblers/VIPs |
| Toy | Intelligent robotics |
| Vehicle | Safety alert system based on eyelid movement |

The largest face recognition system in the world, with over 75 million photographs, is operated by the U.S. Department of State and is actively used for visa processing.

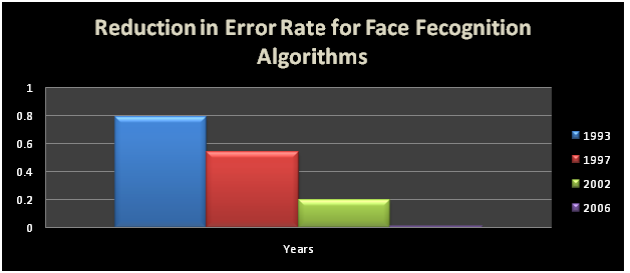

In 2006, the performance of the latest face recognition algorithms was evaluated in the Face Recognition Grand Challenge. High-resolution face images, 3-D face scans, and iris images were used in the tests. The results indicated that the new algorithms are 10 times more accurate than the face recognition algorithms of 2002 and 100 times more accurate than those of 1995. Some of the algorithms were able to outperform human participants in recognizing faces and could uniquely identify identical twins.

Weaknesses vs. Strengths

Among the different biometric techniques, facial recognition may not be the most reliable and efficient, but it has several advantages over the others: it is natural, easy to use, and does not require aid from the test subject. Properly designed systems installed in airports, multiplexes, and other public places can detect the presence of criminals in a crowd. Other biometrics such as fingerprints, iris, and speech recognition cannot perform this kind of mass scanning. However, questions have been raised about the effectiveness of facial recognition software in railway and airport security.

Critics of the technology complain that the London Borough of Newham scheme has never recognized a single criminal, despite several criminals in the system’s database living in the Borough and the system having been running for several years. “Not once, as far as the police know, has Newham’s automatic facial recognition system spotted a live target.”

Despite the successes of many systems, many issues remain to be addressed. Among those issues, the following are prominent for most systems: the illumination problem, the pose problem, scale variability, images taken years apart, glasses, moustaches, beards, low quality image acquisition, partially occluded faces etc. Figures below show different images which present some of the problems encountered in face recognition. An additional important problem, on top of the images to be recognized, is how different face recognition systems are compared.

The illumination problem is illustrated in the following figure, where the same face appears differently due to the change in lighting. More specifically, the changes induced by illumination could be larger than the differences between individuals, causing systems based on comparing images to misclassify the identity of the input image.

The pose problem is illustrated in the following figure, where the same face appears differently due to changes in viewing condition. The pose problem has been divided into three categories: (1) the simple case with small rotation angles, (2) the most commonly addressed case, when there is a set of training image pairs (frontal and rotated images), and (3) the most difficult case, when training image pairs are not available and illumination variations are present.

Face Recognition Process

Regardless of the algorithm used, facial recognition is accomplished in a five-step process.

1. Image acquisition:

Image acquisition can be accomplished by digitally scanning an existing photograph or by using an electro-optical camera to acquire a live picture of a subject. Video can also be used as a source of facial images. Most existing facial recognition systems use a single camera. The recognition rate is relatively low when face images vary in pose, expression, and illumination. As the pose angle increases, the recognition rate decreases, and it drops sharply when the pose angle exceeds 30 degrees. Varying illumination is less of a problem for some algorithms, such as LDA, which can still recognize faces under different lighting, but this is not true for PCA. To overcome this problem when the PCA algorithm is used, we can acquire face images with a frontal view (or little rotation), a moderate facial expression, and the same illumination.

2. Image Preprocessing:

Face recognition algorithms have to deal with significant illumination variations between gallery and probe images. For this reason, an image preprocessing algorithm that compensates for illumination variations is applied prior to recognition. The images used are converted to grayscale.

Histogram equalization is used here to enhance important features by spreading the image's intensity values over the full range, which increases contrast and improves face recognition. (It does not remove noise by itself and can even make noise more visible.) It is usually applied to images that are too dark or too bright.

The idea behind image enhancement techniques is to bring out detail that is obscured, or simply to highlight certain features of interest in an image. Images are enhanced to improve the recognition performance of the system.
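As an illustration of the preprocessing step, here is a minimal sketch of histogram equalization for an 8-bit grayscale image using NumPy. The function name `equalize_histogram` is chosen for this example only, and the sketch assumes the image is already a 2-D `uint8` array; it is not necessarily how any particular system implements this step.

```python
import numpy as np

def equalize_histogram(gray):
    """Equalize an 8-bit grayscale image given as a 2-D uint8 array."""
    gray = np.asarray(gray, dtype=np.uint8)
    # Count how many pixels fall on each of the 256 intensity levels.
    hist = np.bincount(gray.ravel(), minlength=256)
    # Cumulative distribution: pixels at or below each level.
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]      # CDF at the darkest level that occurs
    total = gray.size
    if total == cdf_min:           # constant image: nothing to stretch
        return gray.copy()
    # Map each level so the darkest present level goes to 0
    # and the brightest present level goes to 255.
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255.0)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[gray]
```

Calling `equalize_histogram(image)` on a dark image whose values occupy only a narrow band returns an image of the same shape whose values span the full 0 to 255 range, while preserving the brightness ordering of the pixels.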

3. Face Detection:

Face detection is a computer technology that determines the locations and sizes of human faces in arbitrary images. It detects facial features and ignores anything else, such as buildings, trees and bodies. Face detection can be regarded as a specific case of object-class recognition, a major task in computer vision. Software is employed to detect the location of any faces in the acquired image. Generalized patterns of what a face “looks like” are employed to pick out the faces.

The method devised by Viola and Jones, which is used here, relies on Haar-like features. Even for a small image, the number of Haar-like features is very large: for a 24×24 pixel window, one can generate more than 180,000 features.
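To make the idea of a Haar-like feature concrete, the sketch below computes a two-rectangle feature using an integral image (summed-area table), the data structure Viola and Jones use so that any rectangle sum costs four lookups. The function names are hypothetical and the sketch covers only one of the feature shapes; it is an illustration under these assumptions, not the full detector.

```python
import numpy as np

def integral_image(gray):
    """Summed-area table with an extra zero row and column at the top-left."""
    gray = np.asarray(gray, dtype=np.int64)
    ii = np.zeros((gray.shape[0] + 1, gray.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = gray.cumsum(axis=0).cumsum(axis=1)
    return ii

def rect_sum(ii, top, left, height, width):
    """Sum of the pixels inside a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom, right] - ii[top, right] - ii[bottom, left] + ii[top, left]

def two_rect_horizontal(ii, top, left, height, width):
    """Two-rectangle Haar-like feature: left-half sum minus right-half sum."""
    half = width // 2
    left_sum = rect_sum(ii, top, left, height, half)
    right_sum = rect_sum(ii, top, left + half, height, half)
    return left_sum - right_sum
```

A large positive or negative value indicates a strong brightness difference between the two halves of the rectangle. Varying the position, size, and shape of such rectangles inside the detection window is what produces the very large feature pool mentioned above.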

AdaBoost is used to train a classifier, and in doing so it also performs feature selection. The final classifier uses only a few hundred Haar-like features, yet it achieves a very good hit rate with a relatively low false detection rate.
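The way AdaBoost doubles as a feature selector can be sketched as follows. This is a simplified, assumed version: each weak classifier is a threshold on a single precomputed feature value, every round picks the feature with the lowest weighted error, and the names `train_adaboost` and `predict_adaboost` are made up for the example. It uses an exhaustive threshold search, so it is only suitable for small toy inputs.

```python
import numpy as np

def _stump_predict(column, threshold, polarity):
    """Weak classifier: +1 on one side of the threshold, -1 on the other."""
    return np.where(polarity * column >= polarity * threshold, 1.0, -1.0)

def train_adaboost(features, labels, rounds):
    """features: (n_samples, n_features) array; labels: +1 (face) or -1."""
    X = np.asarray(features, dtype=float)
    y = np.asarray(labels, dtype=float)
    n, d = X.shape
    w = np.full(n, 1.0 / n)                 # start with uniform sample weights
    stumps = []
    for _ in range(rounds):
        best = None
        for j in range(d):                  # try every feature ...
            for thr in np.unique(X[:, j]):  # ... every threshold ...
                for polarity in (1.0, -1.0):  # ... and both directions
                    pred = _stump_predict(X[:, j], thr, polarity)
                    err = w[pred != y].sum()
                    if best is None or err < best[0]:
                        best = (err, j, thr, polarity, pred)
        err, j, thr, polarity, pred = best
        err = min(max(err, 1e-10), 1 - 1e-10)   # avoid division by zero
        alpha = 0.5 * np.log((1 - err) / err)   # vote weight of this stump
        w *= np.exp(-alpha * y * pred)          # emphasize misclassified samples
        w /= w.sum()
        stumps.append((j, thr, polarity, alpha))
    return stumps

def predict_adaboost(stumps, features):
    """Weighted vote of all selected stumps."""
    X = np.asarray(features, dtype=float)
    score = np.zeros(X.shape[0])
    for j, thr, polarity, alpha in stumps:
        score += alpha * _stump_predict(X[:, j], thr, polarity)
    return np.where(score >= 0, 1, -1)
```

The feature indices stored in `stumps` are the selected features: after training, only those columns need to be computed for a new window, which is how a pool of many thousands of candidates can shrink to a few hundred.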

4. Feature Extraction

This module is responsible for composing a feature vector that is good enough to represent the face image. Its goal is to extract the relevant data from the captured sample. Feature extraction is divided into two categories: the holistic feature category and the local feature category. Local feature-based approaches try to automatically locate specific facial features such as eyes, nose, and mouth based on known distances between them. The holistic feature category deals with the input face image as a whole.

Different methods are used to extract the identifying features of a face. The most popular method is Principal Component Analysis (PCA), commonly referred to as the eigenface method. Another method used here is Linear Discriminant Analysis (LDA), referred to as the Fisherface method. Both the LDA and PCA algorithms belong to the holistic feature category.

Template generation is the result of the feature extraction process. A template is a reduced set of data that represents the unique features of an enrollee’s face consisting of weights for each image in the database. The projected space can be seen as a feature space where each component is seen as a feature.
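A minimal sketch of PCA-based template generation is shown below. It assumes the face images are already detected, aligned, converted to grayscale, and flattened into rows of equal length; the function names are chosen for this example. It obtains the principal components from a singular value decomposition of the mean-centered data, which is one common way to compute them.

```python
import numpy as np

def fit_eigenfaces(faces, n_components):
    """faces: (n_images, n_pixels) array of flattened, aligned grayscale faces."""
    X = np.asarray(faces, dtype=float)
    mean_face = X.mean(axis=0)
    centered = X - mean_face
    # Rows of vt are orthonormal principal axes, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    eigenfaces = vt[:n_components]
    return mean_face, eigenfaces

def project(face, mean_face, eigenfaces):
    """Template: the weights of a face along each eigenface."""
    return eigenfaces @ (np.asarray(face, dtype=float) - mean_face)

def reconstruct(weights, mean_face, eigenfaces):
    """Approximate the original face from its template."""
    return mean_face + weights @ eigenfaces
```

The vector returned by `project` is the template stored for an enrolled face. Keeping only the first few eigenfaces gives a compact template at the cost of a less exact reconstruction.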

5. Declaring a match

The last step is to compare the template generated in step four with those in a database of known faces. In an identification application, the biometric device reads a sample and compares it against every record or template in the database; this process returns a match or a candidate list of potential matches that are close to the generated template. In a verification application, the generated template is compared with only one template in the database, that of the claimed identity, which is faster.

The closest match is found using the Euclidean distance: the system selects the database image whose weights have the minimum distance to the weights of the input image.
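The difference between identification and verification can be sketched with templates stored as NumPy vectors. The function names, the example labels, and the `threshold` parameter are assumptions made for illustration; a real system would tune the threshold on its own data.

```python
import numpy as np

def identify(probe, gallery, labels, top_k=1):
    """1:N search: return the top_k closest (label, distance) pairs."""
    gallery = np.asarray(gallery, dtype=float)
    probe = np.asarray(probe, dtype=float)
    # Euclidean distance from the probe template to every stored template.
    dists = np.linalg.norm(gallery - probe, axis=1)
    order = np.argsort(dists)[:top_k]
    return [(labels[i], float(dists[i])) for i in order]

def verify(probe, claimed_template, threshold):
    """1:1 check against the template of the claimed identity only."""
    diff = np.asarray(probe, dtype=float) - np.asarray(claimed_template, dtype=float)
    return float(np.linalg.norm(diff)) <= threshold

# Hypothetical two-dimensional templates, for illustration only.
gallery = [[0.0, 0.0], [3.0, 4.0], [10.0, 10.0]]
labels = ["ann", "bob", "cy"]
best = identify([3.0, 3.0], gallery, labels)   # closest entry is "bob"
```

Here `identify` scans the whole gallery and returns a ranked candidate list, while `verify` performs a single comparison, which is why verification is the faster of the two.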

Welcome to Ali's blog, A computer engineer & scientist specialized in software development and algorithms.
